
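YMatrix can access data in HDFS through PXF (Platform Extension Framework). With PXF, YMatrix reads structured or semi-structured data on HDFS in parallel. This avoids the redundancy and latency that data migration introduces, so the data can be analyzed in place as soon as the connection is configured.

This section covers two access methods: PXF accessing HDFS directly, and PXF accessing HDFS through Ranger.

If PXF is not installed, see the PXF installation chapter.

This part describes how to configure and use PXF in YMatrix to access HDFS.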

| Software | Version |
|---|---|
| YMatrix | MatrixDB 6.2.2+enterprise |
| Hadoop | Hadoop 3.2.4 |
| PXF | pxf-matrixdb4-6.3.0-1.el7.x86_64 |
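
Before starting, it can help to confirm that the command-line tools used throughout this guide are reachable on the node you are working on. The following is a minimal sketch, not part of the product: the helper name `missing_tools` is hypothetical, and the tool list (`hdfs`, `pxf`, `psql`) is an assumption based on the commands that appear in this guide.

```shell
#!/bin/sh
# Preflight sketch: report which CLIs are missing from PATH.
# The tool list is an assumption; adjust it to the node being checked.

# missing_tools TOOL...  -> prints each tool that is not found on PATH
missing_tools() {
    for tool in "$@"; do
        if ! command -v "$tool" >/dev/null 2>&1; then
            printf '%s\n' "$tool"
        fi
    done
}

missing=$(missing_tools hdfs pxf psql)
if [ -z "$missing" ]; then
    echo "hdfs, pxf and psql are all on PATH"
else
    # Unquoted on purpose so the names are printed on one line.
    echo "Not found on PATH:" $missing
fi
```

A Hadoop node normally only needs `hdfs`, while a YMatrix node needs `pxf` and `psql`, so a missing tool is only a problem on the node where that tool is used.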

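(1) Create directories in Hadoop (run on the Hadoop master node)

First, create directories on HDFS to hold the data files. These directories serve as the data exchange location between YMatrix and the Hadoop cluster.

1. Create the /ymatrix directory under the HDFS root. This directory holds all database-related data files:

hdfs dfs -mkdir /ymatrix

2. Create the pxf_examples subdirectory under /ymatrix. It holds the PXF example data:

hdfs dfs -mkdir /ymatrix/pxf_examples

3. List the HDFS root directory to verify that /ymatrix was created:

hdfs dfs -ls /

4. List the /ymatrix directory to verify that pxf_examples was created:

hdfs dfs -ls /ymatrix/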

5. Generate a test data file. Create a local file that contains city, month, number of orders, and sales amount:

echo 'Prague,Jan,101,4875.33
Rome,Mar,87,1557.39
Bangalore,May,317,8936.99
Beijing,Jul,411,11600.67' > pxf_hdfs_simple.txt

6. Upload the local file to the /ymatrix/pxf_examples directory on HDFS:

hdfs dfs -put pxf_hdfs_simple.txt /ymatrix/pxf_examples/

7. Print the uploaded file to verify the upload:

hdfs dfs -cat /ymatrix/pxf_examples/pxf_hdfs_simple.txt

8. Set the file ownership. Use hdfs dfs -chown to make the mxadmin user the owner of the file:

hdfs dfs -chown -R mxadmin:mxadmin /ymatrix/pxf_examples/pxf_hdfs_simple.txt

(2) Configure environment variables for the mxadmin user (on all YMatrix nodes)

So that YMatrix can interact with the Hadoop cluster, configure the following environment variables on every YMatrix node.

Add the following to .bashrc or your environment configuration file:

export HADOOP_HOME=/opt/modules/hadoop-3.2.4
export HADOOP_CONF_DIR=/opt/modules/hadoop-3.2.4/etc/hadoop
export PXF_CONF=/usr/local/pxf-matrixdb4
export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

(3) Grant the mxadmin user access in the Hadoop cluster (modify on all Hadoop nodes)

To allow the mxadmin user to access the Hadoop cluster, update the core-site.xml configuration file on every Hadoop node.

Edit core-site.xml and add the following configuration:

<property>
  <name>hadoop.proxyuser.mxadmin.groups</name>
  <value>*</value>
</property>
<property>
  <name>hadoop.proxyuser.mxadmin.hosts</name>
  <value>*</value>
</property>

(4) Create the single_hdfs folder (on all YMatrix machines)

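On the YMatrix master node, create a new folder to hold the Hadoop cluster configuration files.

mkdir /usr/local/pxf-matrixdb4/servers/single_hdfs/

(5) Copy the configuration files from the Hadoop machine to the PXF folder (from the Hadoop master node to the YMatrix master node)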

Transfer the Hadoop configuration files to the PXF directory on the YMatrix master node.

Use scp to copy the Hadoop configuration files to the YMatrix master node:

scp $HADOOP_CONF_DIR/core-site.xml 192.168.50.95:/usr/local/pxf-matrixdb4/servers/single_hdfs/
scp $HADOOP_CONF_DIR/hdfs-site.xml 192.168.50.95:/usr/local/pxf-matrixdb4/servers/single_hdfs/
scp $HADOOP_CONF_DIR/yarn-site.xml 192.168.50.95:/usr/local/pxf-matrixdb4/servers/single_hdfs/

(6) Create the pxf-site.xml file (on the YMatrix master node)

On the YMatrix master node, create the PXF configuration file pxf-site.xml so that the PXF service can connect to the Hadoop cluster.

vi /usr/local/pxf-matrixdb4/servers/single_hdfs/pxf-site.xml

<?xml version="1.0" encoding="UTF-8"?>
<configuration>
</configuration>

(7) Set fs.defaultFS in core-site.xml to the IP of the node that hosts the Active NameNode, then sync the PXF configuration (run on the YMatrix master node)

Set the fs.defaultFS property in core-site.xml so that YMatrix connects to the NameNode of the Hadoop cluster.
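
On a cluster with NameNode high availability, you first need to know which NameNode is currently active. The sketch below filters the output of `hdfs haadmin -getAllServiceState` for that purpose. It assumes each output line has the form `<host>:<port> <state>`; the helper name `active_namenode` and the example host names are hypothetical.

```shell
#!/bin/sh
# Sketch: pick the active NameNode out of `hdfs haadmin -getAllServiceState` output.
# Assumption: each output line looks like "<host>:<port>   <state>".

# active_namenode  -> reads state lines on stdin, prints host:port of active entries
active_namenode() {
    awk '$2 == "active" { print $1 }'
}

if command -v hdfs >/dev/null 2>&1; then
    # Only meaningful on an HA cluster; without HA the command reports an error.
    hdfs haadmin -getAllServiceState 2>/dev/null | active_namenode
else
    # Illustrative input with made-up host names, used when hdfs is unavailable.
    printf '%s\n' 'nn1.example.com:8020 standby' 'nn2.example.com:8020 active' | active_namenode
fi
```

The host printed here is the one whose IP belongs in fs.defaultFS. The port in this output is the NameNode RPC port of your cluster and may differ from the 9000 used in this guide.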

1. Modify the fs.defaultFS setting.

<property>
  <name>fs.defaultFS</name>
  <value>hdfs://<namenode_ip>:9000</value>
</property>

2. Sync the PXF configuration.

pxf cluster sync

(8) Query with the pxf extension in the YMatrix database (run on the YMatrix master node)

1. Create the extension

Enable the PXF extension in YMatrix so that YMatrix can access HDFS data.

create extension pxf_fdw;

2. Create the FDW server

Create a foreign server that connects to the file system of the Hadoop cluster.

CREATE SERVER single_hdfs FOREIGN DATA WRAPPER hdfs_pxf_fdw OPTIONS ( config 'single_hdfs' );

3. Create the FDW user mapping

Map the YMatrix user to the FDW server.

CREATE USER MAPPING FOR mxadmin SERVER single_hdfs;

4. Create the FDW foreign table, which maps the file on HDFS into the YMatrix database.

CREATE FOREIGN TABLE pxf_hdfs_table (location text, month text, num_orders int, total_sales float8)
SERVER single_hdfs OPTIONS ( resource '/ymatrix/pxf_examples/pxf_hdfs_simple.txt', format 'text', delimiter ',' );

5. Query the foreign table

Run a query to verify that the data is read successfully.

SELECT * FROM pxf_hdfs_table;

(1) Create a directory (run on the Hadoop master node)

Create a new directory on HDFS to hold the data that will be written.

hdfs dfs -mkdir /ymatrix/pxf_dir_examples

(2) Create the foreign table (run on the YMatrix master node)

In YMatrix, create a foreign table that points to the new HDFS directory. It uses the single_hdfs server created earlier.

CREATE FOREIGN TABLE pxf_hdfsdir_table (location text, month text, num_orders int, total_sales float8)
SERVER single_hdfs OPTIONS (resource '/ymatrix/pxf_dir_examples', format 'text', delimiter ',' );

(3) Write data (run on the YMatrix master node)

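Insert the rows of the existing foreign table pxf_hdfs_table into the newly created foreign table pxf_hdfsdir_table.

INSERT INTO pxf_hdfsdir_table SELECT * FROM pxf_hdfs_table;

(4) Query the foreign table to read all data in the directory (run on the YMatrix master node)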

Run a query to verify that the data was written to the new directory.

SELECT COUNT(*) FROM pxf_hdfsdir_table;

This part describes how to use PXF with Ranger to manage access permissions on HDFS files.

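(1) Create a directory in Hadoop (run on the Hadoop master node)

First, create a directory on HDFS to hold the data files. It serves as the data exchange location between YMatrix and the Hadoop cluster.

1. Create the /ranger directory under the HDFS root

This directory holds all database-related data files. Create it with the following command:

hdfs dfs -mkdir /ranger

2. List the HDFS root directory to verify that /ranger was created

Use the ls command to list the root directory and confirm that the new directory exists.

hdfs dfs -ls /

3. Generate a test data file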

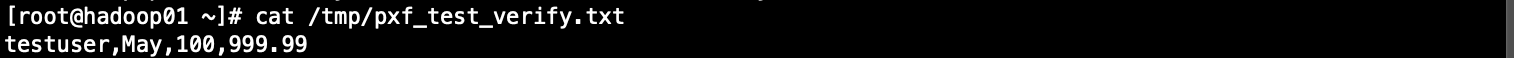

Create a local test data file with the following command.

echo "testuser,May,100,999.99" > /tmp/pxf_test_verify.txt

4. Upload the local file to the /ranger directory on HDFS

Use the hdfs dfs -put command to upload the local file to HDFS.

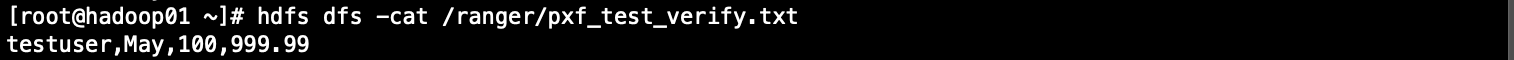

hdfs dfs -put -f /tmp/pxf_test_verify.txt /ranger/

5. Print the uploaded file to verify the upload

Use the following command to print the file and confirm that the upload succeeded.

hdfs dfs -cat /ranger/pxf_test_verify.txt
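
The steps above can be collected into one script. This is a sketch under stated assumptions rather than a supported tool: `DRY_RUN` and the `run` helper are hypothetical names introduced here, and `-mkdir -p` is used instead of the plain `-mkdir` above so that re-running the script does not fail when /ranger already exists.

```shell
#!/bin/sh
# Sketch of the HDFS preparation steps as one script.
# DRY_RUN and run() are illustrative helpers, not part of Hadoop.

DATA_FILE=/tmp/pxf_test_verify.txt
HDFS_DIR=/ranger

# Default to dry-run when the hdfs CLI is not installed on this machine.
if [ -z "${DRY_RUN:-}" ]; then
    if command -v hdfs >/dev/null 2>&1; then DRY_RUN=0; else DRY_RUN=1; fi
fi

# run CMD...  -> print the command in dry-run mode, otherwise execute it
run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "would run: $*"
    else
        "$@"
    fi
}

# Create the local test file and check that it has four comma-separated fields,
# matching the four columns of the foreign table defined later.
echo "testuser,May,100,999.99" > "$DATA_FILE"
field_count=$(awk -F, '{ print NF }' "$DATA_FILE")
if [ "$field_count" != 4 ]; then
    echo "unexpected field count: $field_count" >&2
fi

run hdfs dfs -mkdir -p "$HDFS_DIR"
run hdfs dfs -put -f "$DATA_FILE" "$HDFS_DIR/"
run hdfs dfs -cat "$HDFS_DIR/pxf_test_verify.txt"
```

Setting `DRY_RUN=1` in the environment prints the hdfs commands without executing them, which is useful for reviewing the script on a machine that is not part of the Hadoop cluster.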

(2) Configure environment variables for the mxadmin user (on all YMatrix nodes)

So that YMatrix can interact with the Hadoop cluster, configure the following environment variables on every YMatrix node.

On each YMatrix node, add the following to .bashrc or your environment configuration file.

export HADOOP_HOME=/opt/modules/hadoop-3.2.4
export HADOOP_CONF_DIR=/opt/modules/hadoop-3.2.4/etc/hadoop
export PXF_CONF=/usr/local/pxf-matrixdb4
export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

(3) Grant the mxadmin user access in the Hadoop cluster (modify on all Hadoop nodes)

To allow the mxadmin user to access the Hadoop cluster, update the core-site.xml configuration file on every Hadoop node.

Edit core-site.xml and add the following configuration.

<property>
  <name>hadoop.proxyuser.mxadmin.groups</name>
  <value>*</value>
</property>
<property>
  <name>hadoop.proxyuser.mxadmin.hosts</name>
  <value>*</value>
</property>

(4) Create the single_hdfs folder (on all YMatrix machines)

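On the YMatrix master node, create a new folder to hold the Hadoop cluster configuration files.

mkdir /usr/local/pxf-matrixdb4/servers/single_hdfs/

(5) Copy the configuration files from the Hadoop machine to the PXF folder (from the Hadoop master node to the YMatrix master node)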

Transfer the Hadoop configuration files to the PXF directory on the YMatrix master node.

Use scp to copy the Hadoop configuration files to the YMatrix master node.

scp $HADOOP_CONF_DIR/core-site.xml 192.168.50.95:/usr/local/pxf-matrixdb4/servers/single_hdfs/
scp $HADOOP_CONF_DIR/hdfs-site.xml 192.168.50.95:/usr/local/pxf-matrixdb4/servers/single_hdfs/
scp $HADOOP_CONF_DIR/yarn-site.xml 192.168.50.95:/usr/local/pxf-matrixdb4/servers/single_hdfs/

(6) Create the pxf-site.xml file (on the YMatrix master node)

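On the YMatrix master node, create the PXF configuration file pxf-site.xml so that the PXF service can connect to the Hadoop cluster.

1. Create and edit the pxf-site.xml file with the following command.

vi /usr/local/pxf-matrixdb4/servers/single_hdfs/pxf-site.xml

2. Add the following content to the file.

<?xml version="1.0" encoding="UTF-8"?>
<configuration>
</configuration>

(7) Set fs.defaultFS in core-site.xml to the IP of the node that hosts the Active NameNode, then sync the PXF configuration (run on the YMatrix master node)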

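Set the fs.defaultFS property in core-site.xml so that YMatrix connects to the NameNode of the Hadoop cluster.

1. Modify the fs.defaultFS setting.

<property>
  <name>fs.defaultFS</name>
  <value>hdfs://<namenode_ip>:9000</value>
</property>

2. Sync the PXF configuration.

pxf cluster sync

While running in the background, PXF interacts with HDFS as an operating system user, not as the database user. HDFS permission control in Ranger is therefore based on the operating system user.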

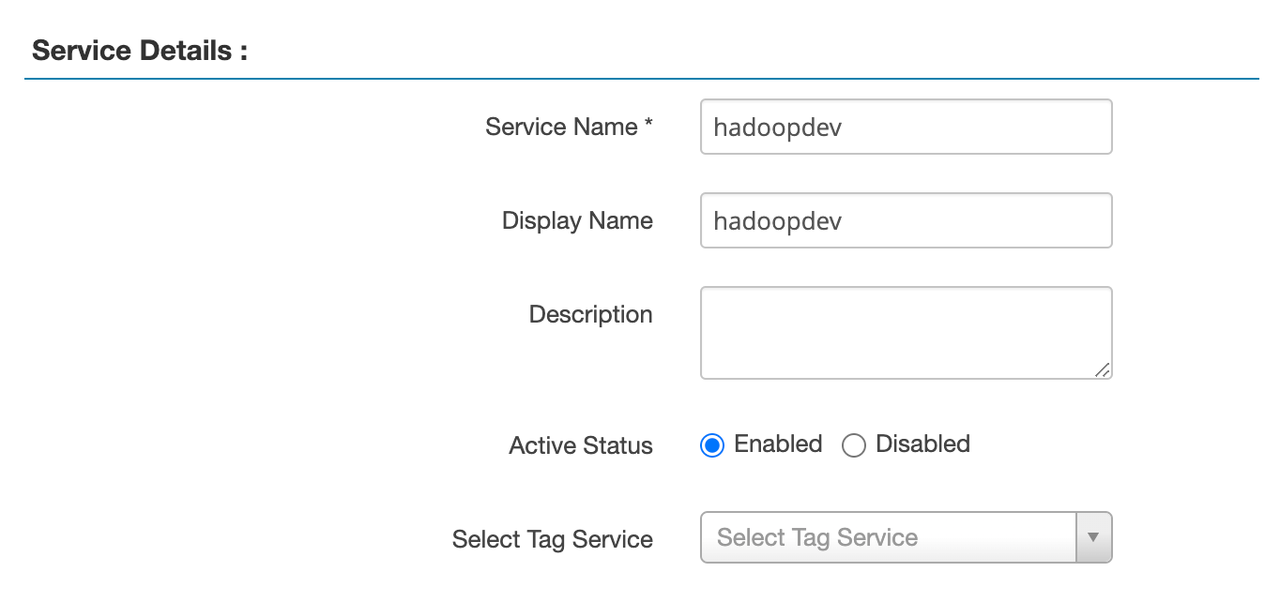

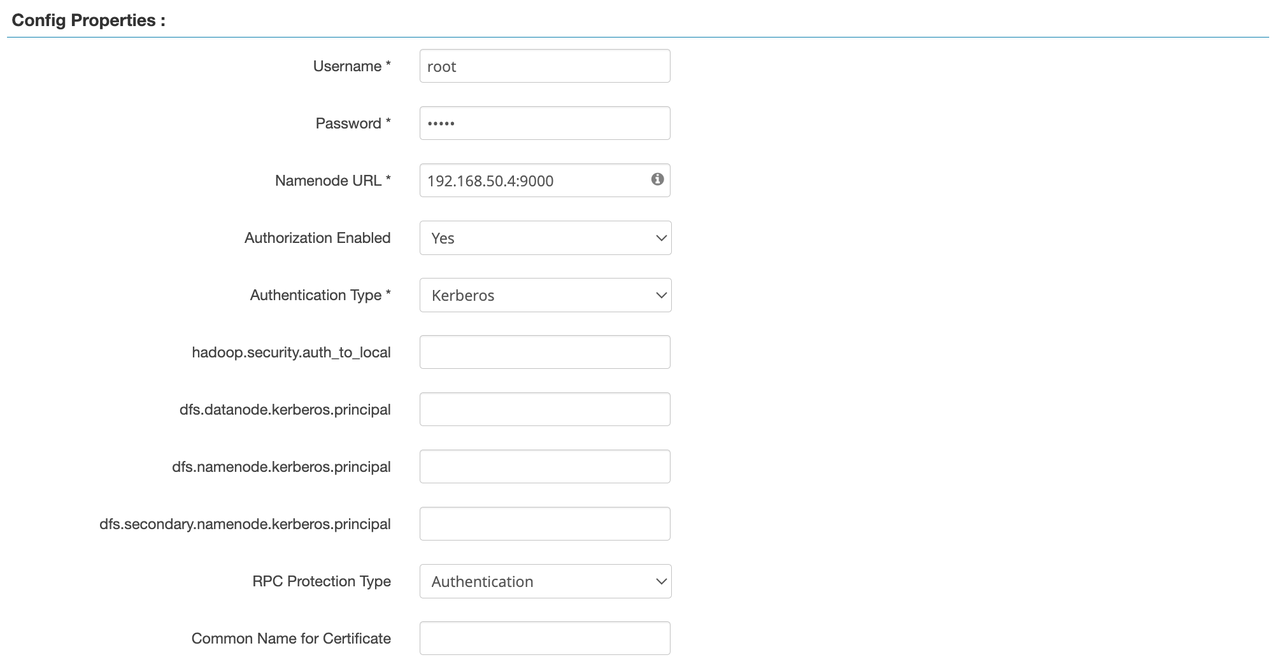

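1) Fill in the HDFS Service configuration

Username: root

... (fill in according to the core-site.xml file)

In Ranger, Kerberos and Simple differ as follows:

Kerberos: authenticates with tickets and encryption. It offers high security and suits production environments, especially where authentication spans multiple systems.

Simple: a simple check based on user name and password. It offers lower security and suits development or test environments.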
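
To see which of the two modes the Hadoop cluster itself uses, you can read the `hadoop.security.authentication` property from core-site.xml. The sketch below does this with plain awk. The helper name `xml_prop` is hypothetical, the default file location is taken from the HADOOP_CONF_DIR value used in this guide, and the idea that the Ranger setting should mirror the cluster setting is an assumption to confirm for your environment.

```shell
#!/bin/sh
# Sketch: read hadoop.security.authentication from a core-site.xml file.
# Assumption: each <name> element appears before its <value> element,
# as in the property blocks shown in this guide.

# xml_prop FILE NAME  -> prints the <value> that follows <name>NAME</name>
xml_prop() {
    awk -v want="$2" '
        /<name>/ { n = $0; sub(/.*<name>/, "", n); sub(/<\/name>.*/, "", n); hit = (n == want) }
        /<value>/ && hit { v = $0; sub(/.*<value>/, "", v); sub(/<\/value>.*/, "", v); print v; exit }
    ' "$1"
}

conf="${HADOOP_CONF_DIR:-/opt/modules/hadoop-3.2.4/etc/hadoop}/core-site.xml"
if [ -r "$conf" ]; then
    auth=$(xml_prop "$conf" hadoop.security.authentication)
    # Hadoop falls back to "simple" when the property is not set.
    echo "authentication: ${auth:-simple}"
else
    echo "core-site.xml not readable at $conf"
fi
```

The same helper can print any other property in the file, for example `xml_prop "$conf" fs.defaultFS`.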

Save the service configuration.

Note!

After creating the Service, you must restart the Ranger plugin on Hadoop for the change to take effect.

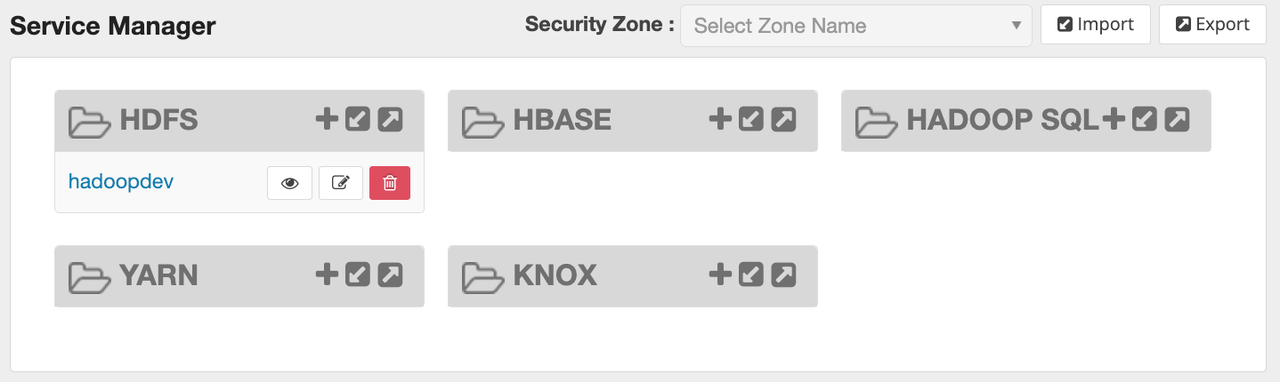

2) Create the mxadmin user in Ranger

(1) Open the "User / Groups" page

In the menu, select User / Groups, then click Add New User.

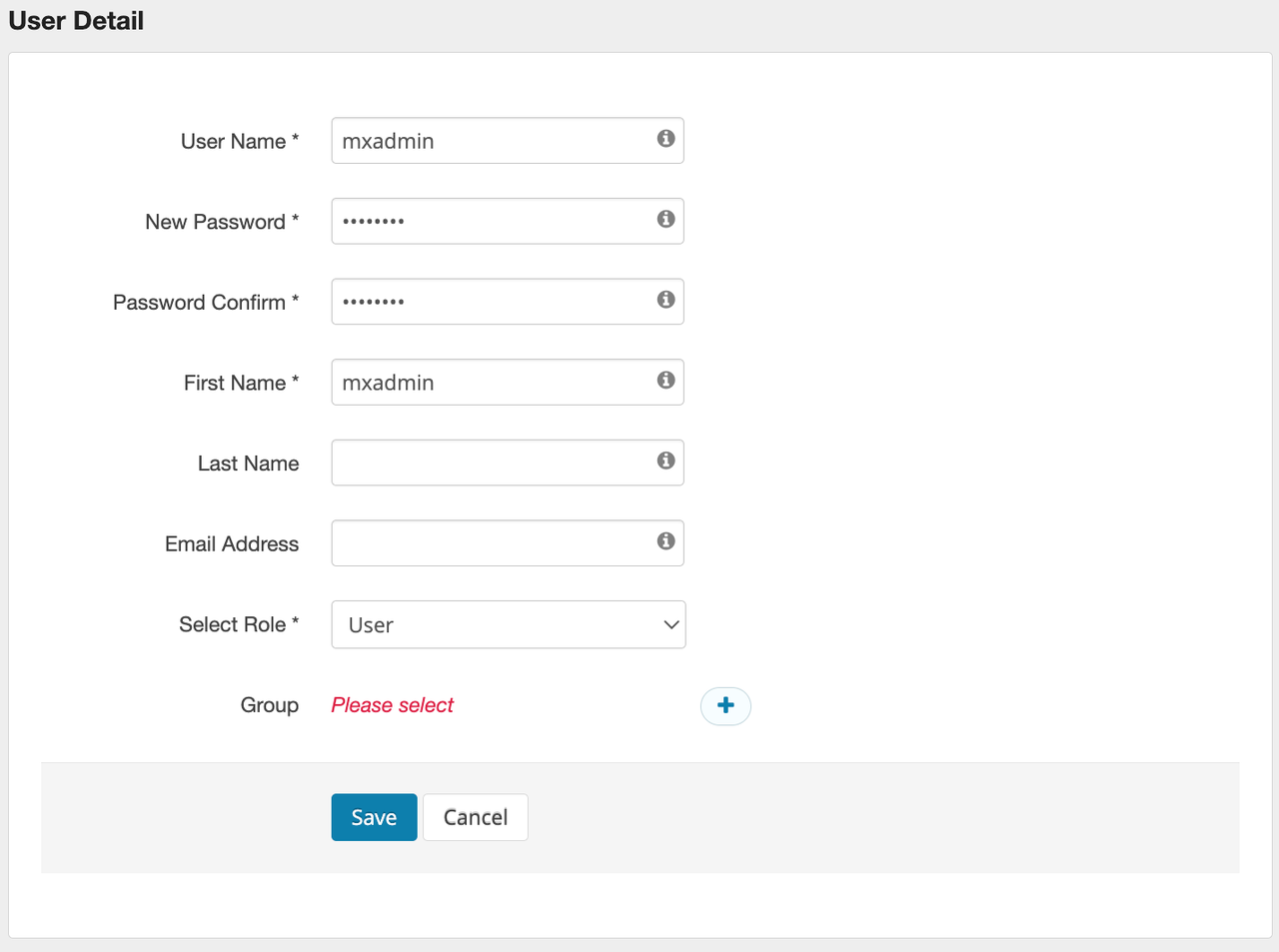

(2) Create the mxadmin user

In the "User Name" field, enter mxadmin, then click Save to save the user.

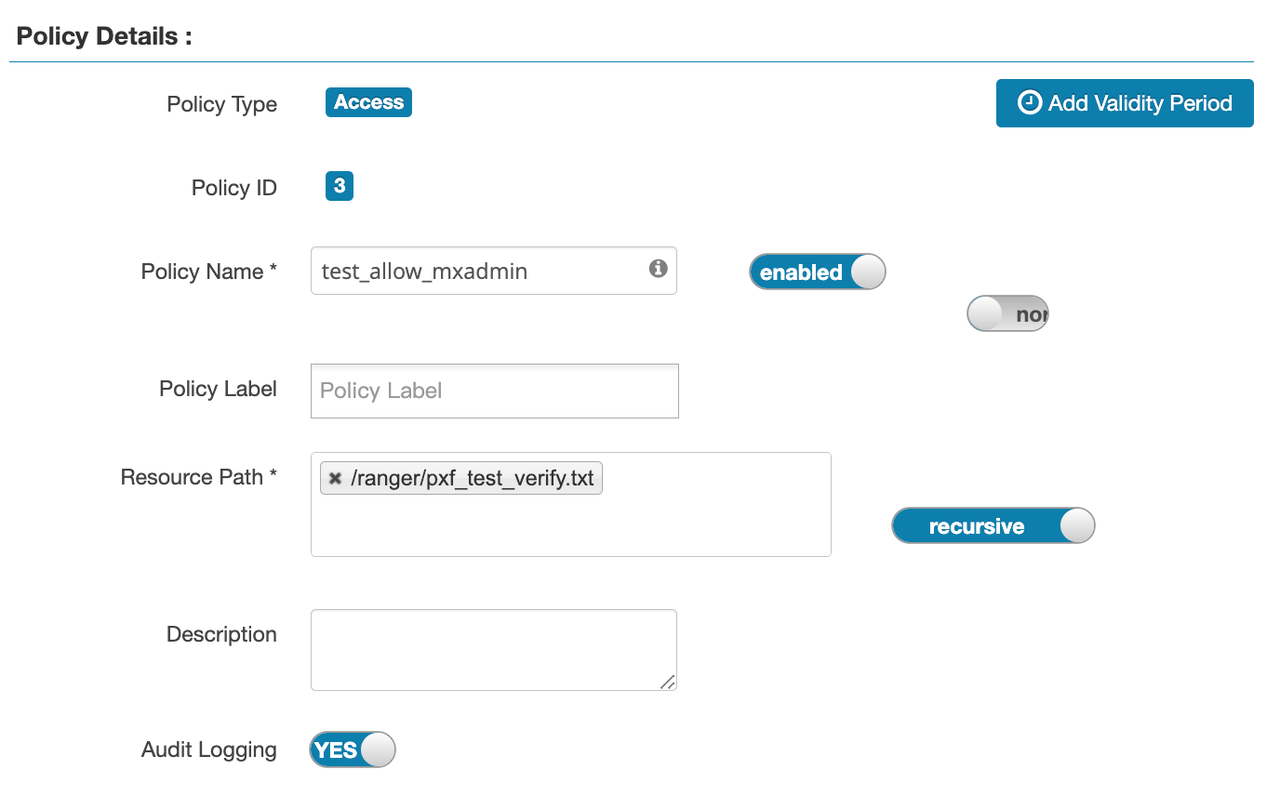

(3) Configure HDFS access permissions for the mxadmin user

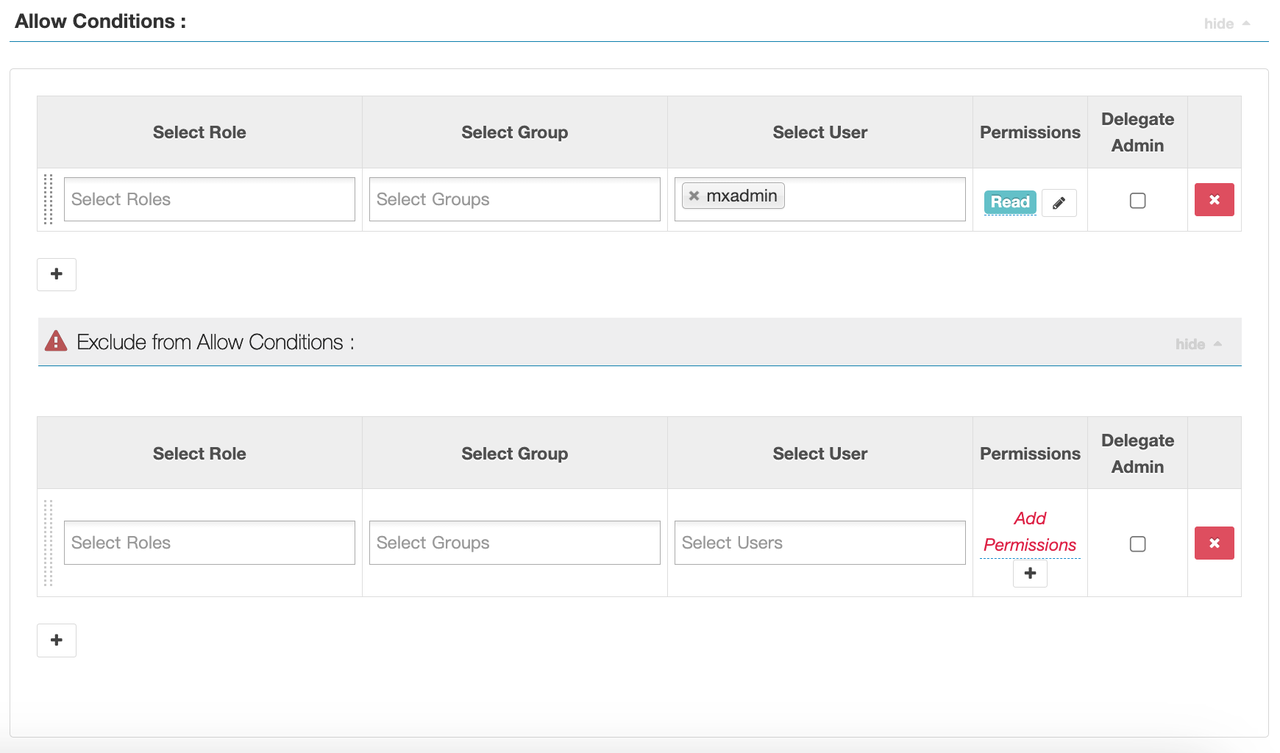

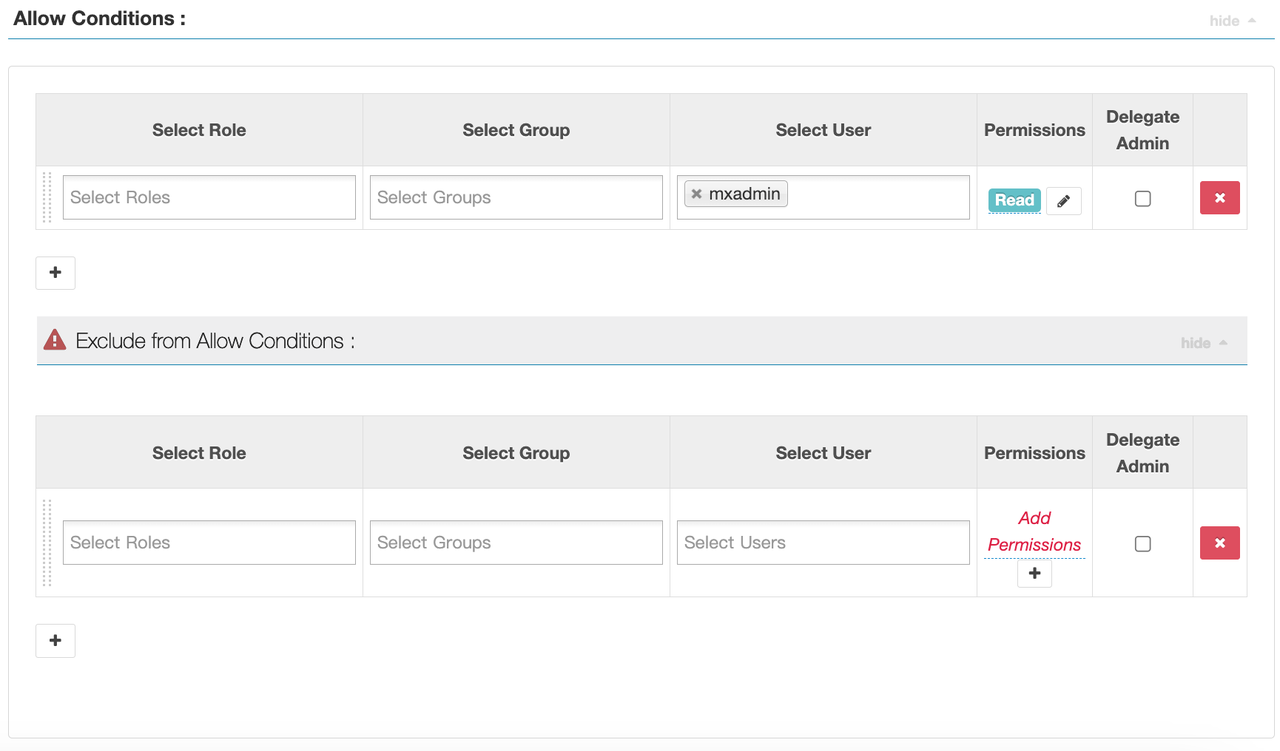

In Ranger, go to the hadoop_hdfs service and click Add New Policy.

Configure the policy as follows:

Policy Name : test_allow_mxadmin

Resource Path : /ranger/pxf_test_verify.txt

User : mxadmin

Permissions : read

Save the policy.
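
The same policy can also be expressed as a JSON document and submitted through Ranger's public REST API instead of the web UI. The sketch below is illustrative only: `RANGER_URL` and `RANGER_USER` are hypothetical environment variables, and both the endpoint path and the JSON layout follow the Ranger public v2 policy format as an assumption that should be checked against the Ranger version you run.

```shell
#!/bin/sh
# Sketch: the policy from this step as JSON for Ranger's public REST API.
# Assumptions: RANGER_URL, RANGER_USER, the endpoint path and the JSON layout
# must be verified against your Ranger version before use.

# policy_json  -> prints the policy document
policy_json() {
    cat <<'EOF'
{
  "service": "hadoop_hdfs",
  "name": "test_allow_mxadmin",
  "resources": {
    "path": { "values": ["/ranger/pxf_test_verify.txt"], "isRecursive": false }
  },
  "policyItems": [
    { "users": ["mxadmin"], "accesses": [ { "type": "read", "isAllowed": true } ] }
  ]
}
EOF
}

if [ -n "${RANGER_URL:-}" ]; then
    # With only a user name given, curl prompts for the password.
    policy_json | curl -sS -u "${RANGER_USER:-admin}" \
        -H 'Content-Type: application/json' \
        -X POST -d @- "$RANGER_URL/service/public/v2/api/policy"
else
    # Without RANGER_URL, just print the document for review.
    policy_json
fi
```

Keeping the policy as a document makes it easy to review and to recreate in another environment. The web UI steps above remain the procedure this guide describes.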

I. Create a test database

create database mxadmin;

-- Switch to the mxadmin database

\c mxadmin

II. Create the extension

Enable the PXF extension in YMatrix so that YMatrix can access HDFS data.

Run the following command to create the PXF extension:

create extension pxf_fdw;

III. Create the FDW server

Create a foreign server that connects to the file system of the Hadoop cluster.

Run the following SQL command to create the FDW server:

CREATE SERVER single_hdfs FOREIGN DATA WRAPPER hdfs_pxf_fdw OPTIONS ( config 'single_hdfs' );

IV. Create the FDW user mapping

Map the YMatrix user to the FDW server by running the following SQL command.

CREATE USER MAPPING FOR mxadmin SERVER single_hdfs;

V. Create the FDW foreign table

创建一个外部表,将 HDFS 上的文件映射到 YMatrix 数据库中, 执行以下 SQL 命令创建 Foreign Table:

CREATE FOREIGN TABLE pxf_hdfs_verify (

name text,

month text,

count int,

amount float8

)

SERVER single_hdfs

OPTIONS (

resource '/ranger/pxf_test_verify.txt',

format 'text',

delimiter ','

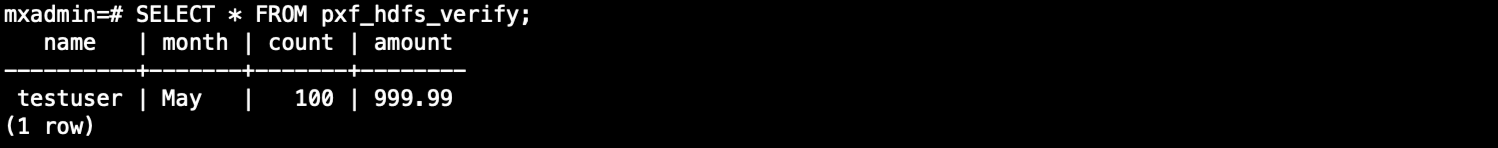

);六、查询外部表

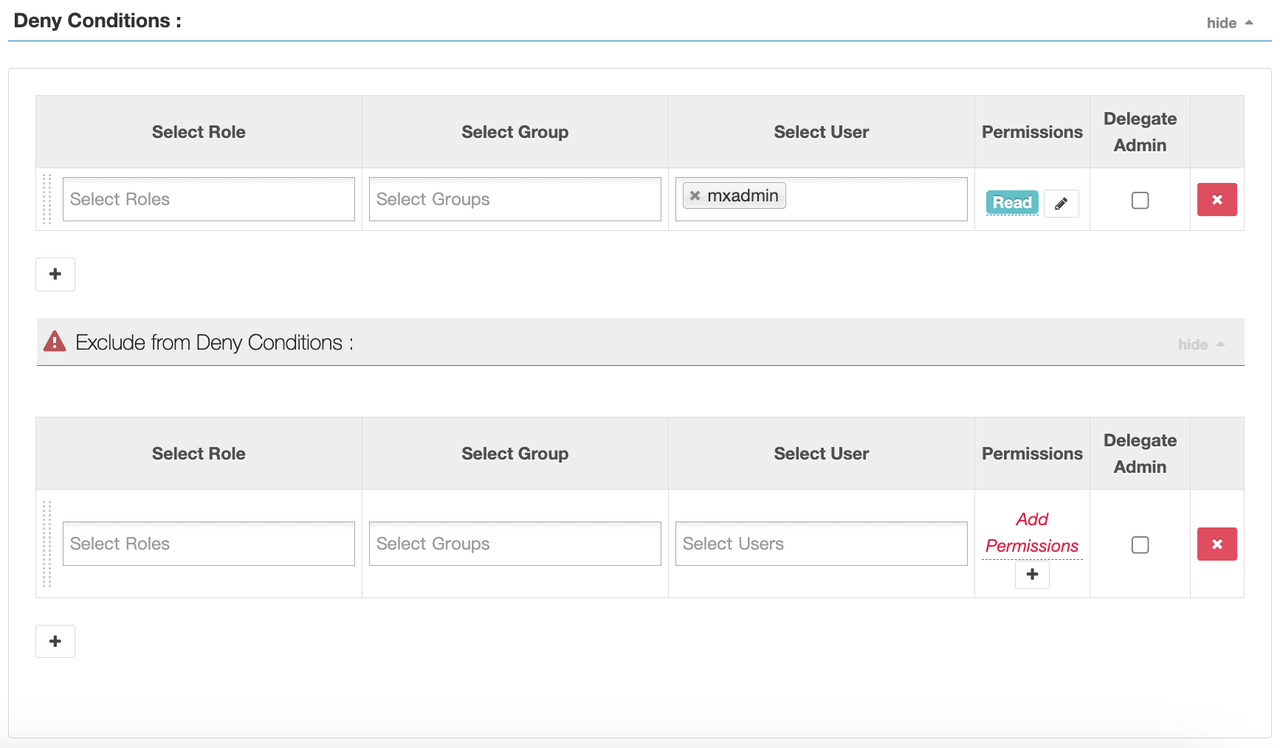

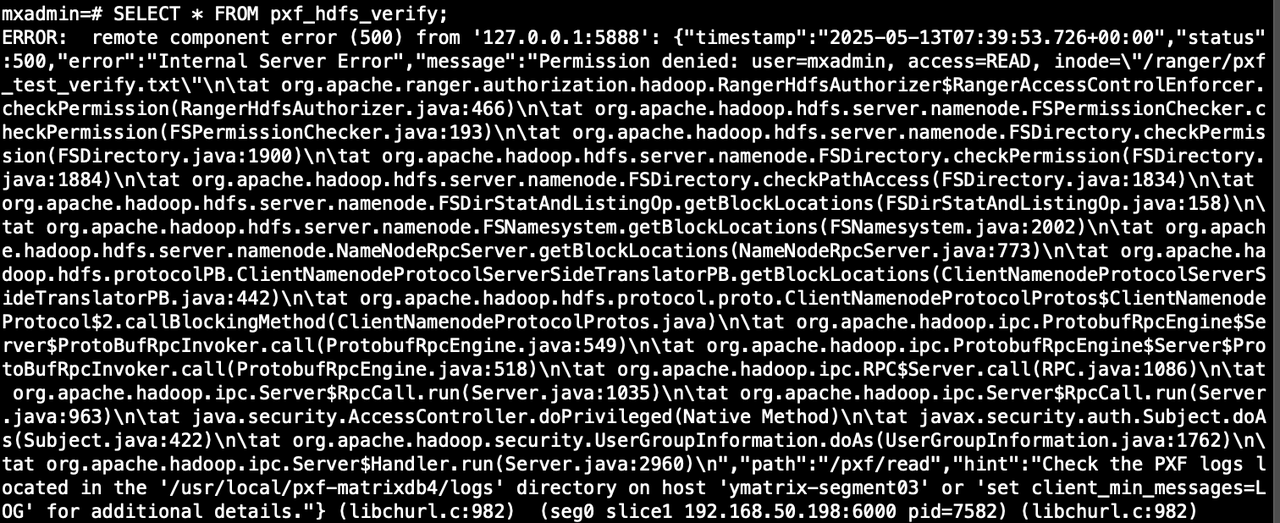

1) When Ranger is configured with Deny Conditions

SELECT * FROM pxf_hdfs_verify;

2) When Ranger is configured with Allow Conditions

SELECT * FROM pxf_hdfs_verify;
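
To repeat this check from the command line after each policy change, the query can be wrapped in a small script. This is a sketch with hypothetical names (`describe_result`). It assumes that a read blocked by Ranger surfaces as a failed query, so that psql exits with a non-zero status, and it cannot tell a policy denial apart from other errors such as a connection failure.

```shell
#!/bin/sh
# Sketch: run the verification query and report whether the read succeeded.
# Assumption: a denied read makes the query fail, so psql exits non-zero.

# describe_result EXIT_CODE  -> human-readable outcome for a psql exit status
describe_result() {
    if [ "$1" -eq 0 ]; then
        echo "read allowed"
    else
        echo "read failed (denied by policy or another error)"
    fi
}

if command -v psql >/dev/null 2>&1; then
    psql -d mxadmin -v ON_ERROR_STOP=1 -c 'SELECT * FROM pxf_hdfs_verify;'
    describe_result $?
else
    echo "psql not found; run the query from a YMatrix node"
fi
```

Run it once with the Deny Conditions policy in place and once with the Allow Conditions policy, and compare the two outcomes with the screenshots above.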